79tka Insights

Your go-to source for the latest news and information.

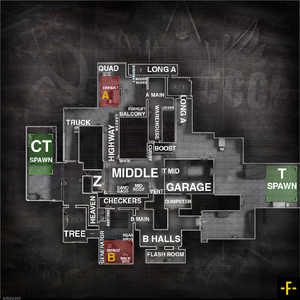

Cache Chronicles: Secrets of CSGO's Timeless Map

Uncover the hidden secrets of CSGO's iconic Cache map! Dive into strategies, lore, and tips that every player needs to know!

The Evolution of Cache: From Concept to Competitive Arena

The concept of cache has evolved significantly since its inception in computing. It began as a simple mechanism for keeping frequently accessed data close at hand and has become a crucial element of system performance. Caches were first developed to address latency, such as the speed gap between processors and main memory, and they now appear at many levels of the computing hierarchy, including CPU caches, disk caches, and web caches. As demand for faster data retrieval grew, new techniques and architectures improved both efficiency and user experience. The shift from fixed hardware caches to more sophisticated, policy-driven software caches, which decide what to keep and what to evict, illustrates the continued importance of caching in modern computing.
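The policy-driven side of caching usually comes down to a replacement policy: what to evict when the cache is full. As one illustration (our choice of policy, not a method named in this article), here is a minimal least-recently-used (LRU) cache in Python. The class name `LRUCache` and its `get`/`put` methods are hypothetical names for this sketch.

```python
from collections import OrderedDict
from collections.abc import Hashable
from typing import Generic, Optional, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class LRUCache(Generic[K, V]):
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data: "OrderedDict[K, V]" = OrderedDict()

    def get(self, key: K) -> Optional[V]:
        if key not in self._data:
            return None
        # Mark the entry as most recently used by moving it to the end.
        self._data.move_to_end(key)
        return self._data[key]

    def put(self, key: K, value: V) -> None:
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        # Over capacity: drop the entry at the front (least recently used).
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)


cache: LRUCache[str, int] = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")          # "a" becomes the most recently used entry
cache.put("c", 3)       # cache is full, so "b" is evicted
print(cache.get("b"))   # None
print(cache.get("a"))   # 1
```

`OrderedDict` keeps entries in recency order, so both lookups and evictions stay O(1).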

Today, cache management has entered a competitive arena, where businesses use advanced caching strategies to speed up their web applications and services. Content delivery networks (CDNs) keep copies of content on edge servers close to users, in-memory caches hold hot data in RAM, and browser caching lets clients reuse resources they have already downloaded. Together these techniques reduce server load and shorten page load times, which in turn improves user satisfaction. As the volume of data keeps growing, caching is likely to remain a key driver of performance and efficiency.
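Browser and CDN caches decide whether a stored response can be reused largely from its `Cache-Control` header. The sketch below is a deliberately simplified freshness check, assuming only the `max-age`, `no-store`, and `no-cache` directives matter; real HTTP caches also handle `Expires`, `s-maxage`, revalidation, and heuristic freshness. The function names are hypothetical.

```python
from typing import Optional


def parse_max_age(cache_control: str) -> Optional[int]:
    """Return the max-age directive (in seconds) from a Cache-Control value, if present."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.isdigit():
            return int(value)
    return None


def is_fresh(cache_control: str, age_seconds: int) -> bool:
    """Simplified check: a stored response is fresh while its age is below max-age."""
    directives = {d.strip().split("=")[0].lower() for d in cache_control.split(",")}
    if "no-store" in directives or "no-cache" in directives:
        return False  # cannot be reused without going back to the origin
    max_age = parse_max_age(cache_control)
    # Without max-age this sketch treats the response as stale (no heuristics).
    return max_age is not None and age_seconds < max_age


print(is_fresh("public, max-age=3600", age_seconds=120))  # True
print(is_fresh("max-age=60", age_seconds=90))             # False
```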

Top Strategies for Dominating in Cache: A Tactical Breakdown

In the competitive landscape of cache management, dominating requires a comprehensive approach. One of the top strategies is to optimize your caching layers with a multi-tiered design that places data according to how often it is accessed. For example, frequently accessed data can be kept in memory caches, while less critical data can reside in disk-based caches. In-memory stores such as Redis or Memcached can also speed up retrieval considerably and improve overall system performance.
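A multi-tiered layout like the one described can be sketched in a few lines. This is a minimal sketch under stated assumptions: the class name `TwoTierCache` is hypothetical, the slow tier is a plain dict standing in for a disk cache or a networked store such as Redis or Memcached (no real client API is used), and recency (LRU) serves as a simple proxy for access frequency when deciding what stays in memory.

```python
from collections import OrderedDict
from typing import Dict, Optional


class TwoTierCache:
    """Small, fast memory tier in front of a larger, slower tier.

    The slow tier is a plain dict used as a hypothetical stand-in for a
    disk cache or a networked store.
    """

    def __init__(self, memory_capacity: int) -> None:
        self.memory_capacity = memory_capacity
        self.memory: "OrderedDict[str, bytes]" = OrderedDict()
        self.slow: Dict[str, bytes] = {}

    def get(self, key: str) -> Optional[bytes]:
        if key in self.memory:
            self.memory.move_to_end(key)  # refresh recency in the hot tier
            return self.memory[key]
        value = self.slow.get(key)
        if value is not None:
            self._promote(key, value)  # data that gets read moves up to memory
        return value

    def put(self, key: str, value: bytes) -> None:
        # Writes always land in the slow tier; the memory tier fills on reads.
        self.slow[key] = value
        if key in self.memory:
            self.memory[key] = value  # keep the hot copy consistent

    def _promote(self, key: str, value: bytes) -> None:
        self.memory[key] = value
        self.memory.move_to_end(key)
        if len(self.memory) > self.memory_capacity:
            # Drop the least recently used entry from memory; it stays in the slow tier.
            self.memory.popitem(last=False)


cache = TwoTierCache(memory_capacity=1)
cache.put("user:1", b"alice")
cache.put("user:2", b"bob")
cache.get("user:1")  # read from the slow tier, then promoted to memory
cache.get("user:2")  # promoted; "user:1" leaves memory but remains in the slow tier
```

Because every write goes to the slow tier, evicting from memory never loses data; it only makes the next read of that key slower.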

Another vital tactic for dominating with cache is to monitor and analyze cache performance metrics regularly. Monitoring and analytics tools let you measure the cache hit ratio (the fraction of requests served from the cache), track latency, and spot potential bottlenecks. These metrics support informed decisions about clearing stale data and adjusting caching rules. Consider also an invalidation strategy, such as giving each entry a time-to-live (TTL), so that outdated entries expire automatically and are reloaded, ensuring that your application serves current data to users.
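To make the two ideas above concrete, here is a minimal sketch of a TTL cache that also counts hits and misses so its hit ratio can be reported. It assumes a cache-aside pattern: expired entries are dropped lazily on read and the caller reloads them after a miss, rather than being refreshed in the background. `TTLCache` and its injectable `clock` parameter are hypothetical names chosen for this sketch.

```python
import time
from collections.abc import Hashable
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Cache whose entries expire a fixed number of seconds after being written."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so examples and tests can control time
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is not None:
            expires_at, value = entry
            if self.clock() < expires_at:
                self.hits += 1
                return value
            del self._entries[key]  # stale entry: invalidate lazily on read
        self.misses += 1
        return None

    def set(self, key: Hashable, value: Any) -> None:
        self._entries[key] = (self.clock() + self.ttl, value)

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


now = [0.0]  # fake clock, in seconds
cache = TTLCache(ttl_seconds=30, clock=lambda: now[0])
cache.set("price", 42)
cache.get("price")   # hit: still within its TTL
now[0] = 31.0
cache.get("price")   # miss: the entry expired after 30 seconds
print(f"hit ratio: {cache.hit_ratio:.2f}")  # hit ratio: 0.50
```

A low hit ratio is usually the signal to revisit TTLs, cache size, or which data is cached at all.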

Why Cache Remains a Fan Favorite: Exploring Its Unique Features

Cache has long been a fan favorite among tech enthusiasts and professionals alike, and it’s easy to see why. Its most notable feature is rapid data retrieval. Instead of going back to a slower source, such as a disk, a database, or a remote server, for every request, a cache keeps frequently accessed data in faster storage, usually memory, so repeated reads return far more quickly. Users see shorter load times and better overall responsiveness, whether they are running complex applications or browsing websites.
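Python's standard library shows this effect with very little code. The sketch below assumes a hypothetical slow lookup, `load_profile`, simulated with `time.sleep`; `functools.lru_cache` stores each result so a repeated call with the same argument skips the slow work entirely.

```python
import functools
import time


@functools.lru_cache(maxsize=128)
def load_profile(user_id: int) -> str:
    """Hypothetical slow lookup, standing in for a database or API call."""
    time.sleep(0.1)  # simulate a slow backend
    return f"profile-{user_id}"


load_profile(7)  # miss: runs the function body and stores the result
load_profile(7)  # hit: answered from the cache without sleeping
print(load_profile.cache_info())  # CacheInfo(hits=1, misses=1, maxsize=128, currsize=1)
```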

Another aspect that makes cache stand out is its flexibility. It comes in several forms, including memory caches, disk caches, and distributed caches shared across multiple servers, so organizations can choose the option that best fits their needs. Developers can also tune caching strategies, such as the eviction policy, expiration time, and cache size, to match different workload patterns and improve efficiency. These features make cache not only a practical choice but also a vital component of modern computing systems.
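One way to keep that flexibility in application code is to program against a small cache interface and swap the storage behind it. This is a minimal sketch, assuming a hypothetical `CacheBackend` protocol with `get`/`set` methods: `MemoryBackend` uses a dict, while `DiskBackend` uses the standard-library `shelve` module so entries survive restarts. Distributed backends are left out to keep the example self-contained.

```python
import os
import shelve
import tempfile
from typing import Callable, Dict, List, Optional, Protocol


class CacheBackend(Protocol):
    def get(self, key: str) -> Optional[str]: ...
    def set(self, key: str, value: str) -> None: ...


class MemoryBackend:
    """Fastest option; contents are lost when the process exits."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


class DiskBackend:
    """Slower, but persists across restarts via the shelve module."""

    def __init__(self, path: str) -> None:
        self.path = path

    def get(self, key: str) -> Optional[str]:
        with shelve.open(self.path) as db:
            return db.get(key)

    def set(self, key: str, value: str) -> None:
        with shelve.open(self.path) as db:
            db[key] = value


def cached_fetch(backend: CacheBackend, key: str, fetch: Callable[[str], str]) -> str:
    """Cache-aside lookup that works the same with any backend."""
    value = backend.get(key)
    if value is None:
        value = fetch(key)
        backend.set(key, value)
    return value


calls: List[str] = []


def slow_fetch(key: str) -> str:
    calls.append(key)  # record each trip to the (hypothetical) slow source
    return key.upper()


with tempfile.TemporaryDirectory() as tmp:
    for backend in (MemoryBackend(), DiskBackend(os.path.join(tmp, "cache"))):
        cached_fetch(backend, "map", slow_fetch)
        cached_fetch(backend, "map", slow_fetch)  # served from the backend

print(calls)  # ['map', 'map']: one real fetch per backend
```

The calling code never changes; only the backend passed in does, which is what makes it easy to match the cache to the workload.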